Emily M. Bender: ‘Artificial Intelligence’ Isn’t a Tool -- It’s an Ideology Opposed to Community, Connection, Equity, and Sustainability

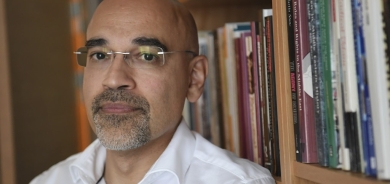

Emily M. Bender has been a faculty member at the University of Washington since 2003, where she currently serves as the Thomas L. and Margo G. Wyckoff Endowed Professor in the Department of Linguistics. She directs the Computational Linguistics Laboratory and the CLMS program, and holds adjunct positions in both the School of Computer Science and Engineering and the Information School. She is also affiliated with UW’s Tech Policy Lab, RAISE, and the Value Sensitive Design Lab. Her research spans multilingual grammar engineering, the interface between linguistics and NLP, the societal impacts of language technology, and sociolinguistic variation. She leads the development of the LinGO Grammar Matrix and has authored two widely used books introducing linguistic concepts to NLP practitioners. In recent years, her work has focused on ethics, data documentation, and the societal implications of emerging technologies. She has served in leadership roles in the ACL, including as President (2022–2025), and is a Fellow of the AAAS. Before joining UW, she held positions at Stanford and UC Berkeley and worked in industry. She received her PhD in Linguistics from Stanford University.

Gulan: Professor Bender, you have been one of the most prominent critical voices warning against the overhyping of large language models. When you hear political leaders and tech executives framing AI as the key to “a new industrial revolution,” what do you see as the real promises—and what are the exaggerated claims we should be more skeptical about?

Emily M. Bender: First and foremost, if the people saying this have not defined the term “AI”, they are making empty and meaningless statements. “Artificial intelligence” does not refer to a coherent set of technologies, nor are any of the things that get marketed that way anything like what popular culture has painted “artificial intelligence” to be. It is always worth being clear about what we are talking about. It’s not “artificial intelligence” but it might be automation. (I say might be, because there are many cases of supposed AI systems actually being people working behind the scenes.) The next step is getting specific about what is being automated.

A lot of the excitement these days is around systems for creating synthetic text, that is, large language models. What’s being automated here is mimicry of how people use language. The danger here is that people will mistake a machine that can output text that looks like something that a doctor or lawyer or teacher or therapist or scientist would say for a machine that can do the work of any of those professionals.

Gulan: As countries race to regulate AI, we see efforts in the European Union (AI Act), the United States, and China, each with their own priorities. From your perspective, what kind of global governance model for AI would be realistic—and what dangers arise if regulation remains fragmented along national or bloc-based lines?

Emily M. Bender: Since “AI” isn’t one thing, it doesn’t make sense to regulate “AI”. More generally, it is important to keep the focus of regulation on the people and organizations that are actually acting in the world, not the (imagined) “AI” systems they are building. Furthermore, it makes sense to look at existing regulations and see which rights and protections need shoring up in light of new technology and existing excesses and abuses by companies and governments. These are primarily rights in the domains of privacy, environmental protections, and worker organizing. Regulators should also think in terms of protecting the information ecosystem from synthetic media -- the text, images and video that can be cheaply created with large language models, text-to-image models and text-to-video models -- and strengthening information infrastructure, such as means of validating authentic media.

Gulan: You co-authored the landmark paper “On the Dangers of Stochastic Parrots”. In today’s geopolitical climate, what do you see as the most urgent security risks of generative AI—whether disinformation, cyberattacks, or erosion of trust in institutions—and how can policymakers address them without stifling genuine innovation?

Emily M. Bender: Unchecked spills of synthetic text and other media into our information ecosystem are dangerous. They make it harder for people to find trustworthy information and to trust such sources even when they find them.

Furthermore, the “regulation stifles innovation” trope is false and unhelpful. I encourage policymakers to think of regulation as a means to channel innovation away from extractive technologies that function to amass wealth and power in the hands of the few and towards technology that is broadly beneficial.

Gulan: AI is often portrayed as both a driver of economic transformation and a potential source of inequality. Do you think the benefits of AI innovation are likely to be distributed globally, or do you foresee a scenario where advanced economies monopolize the gains while developing countries bear the risks?

Emily M. Bender: I reject the premise of this question, not least because “AI innovation” doesn’t refer to anything. I do think it’s clear that there are people getting wealthy right now off of selling various technologies as “AI” and they are clustered in already rich countries. Meanwhile, people in the Majority World are being exploited for data work as well as suffering in extractive mining economies to produce the metals and other resources to build the hardware that is used in training large models.

Gulan: Much of AI is still built around English and a handful of other dominant languages. How do you view the future of linguistic diversity in AI, and what risks of “digital colonialism” emerge if emerging technologies fail to properly represent smaller or marginalized languages?

Emily M. Bender: I have been advocating for truly multilingual work on language technology for many years. In particular, there is a problem within research on language technology that anything applied to English is considered general, whereas work on any other language is considered language-specific. It is for this reason that I coined what has come to be known as the #BenderRule -- always name the language that the research is on, even/especially when it is English.

More generally, however, it is vitally important that language communities maintain control of data in their language and the technologies built with it. Te Hiku Media provides a very nice example of how to achieve this.

Gulan: Many governments in the Middle East and Global South are investing heavily in digital transformation and AI for governance, healthcare, and security. From your standpoint, what should these countries be most cautious about when adopting AI systems built primarily in Silicon Valley or China?

Emily M. Bender: Regardless of where the systems are built, countries should be very cautious about adopting automation for any kind of critical systems. Synthetic text extruding machines (aka LLMs) in particular are being sold as time- and money-saving approaches to governance and social services, but they are anything but. Above and beyond that, I would encourage countries to avoid sending their resources (money and data) to multinational companies. What a tremendous waste to use healthcare funds, for example, to pay some foreign company for a machine that pretends to be a doctor rather than to provide healthcare. Even if the software is being offered for free, it isn’t. These companies are desperate for data, and not above mining, for example, biometric information from people in need.

Gulan: One of your recurring warnings is that AI systems don’t “understand” language the way humans do. How can we design governance and oversight structures that keep human accountability at the center, rather than outsourcing critical decisions to opaque algorithms?

Emily M. Bender: I believe this is relatively simple: Apply existing regulation that holds people, companies and governments accountable for decisions they make. It doesn’t matter if they roll dice, read tea leaves, or ask a chatbot for direction; they are still making the decisions.

What’s not simple is pushing back against the extremely well-funded hype that the tech companies are pushing to encourage everyone, including policymakers, to imagine that their stochastic parrots are artificial beings that will come and save us all. (It is for this reason that Alex Hanna and I wrote our book The AI Con, to help people identify and reject the hype.)

Gulan: Looking ahead to the 2030s, do you expect artificial intelligence to be a tool that strengthens global cooperation and human progress—or do you think it is more likely to deepen existing geopolitical rivalries and inequalities?

Emily M. Bender: “Artificial intelligence” isn’t a tool -- it’s an ideology and one that is in opposition to the values of community, connection, equity and sustainability.